Natural Language Processing (NLP): What is it and how is it used?

Moreover, this list also has a curated collection of NLP in other languages such as Korean, Chinese, German, and more. Words, phrases, and even entire sentences can have more than one interpretation. Sometimes, these sentences genuinely do have several meanings, often causing miscommunication among both humans and computers.

Government agencies are bombarded with text-based data, including digital and paper documents. The main way to develop natural language processing projects is with Python, one of the most popular programming languages in the world. Python NLTK is a suite of tools created specifically for computational linguistics. There is now an entire ecosystem of providers delivering pretrained deep learning models that are trained on different combinations of languages, datasets, and pretraining tasks.

Morphological and lexical analysis

In future work, possible extensions could be to apply our method to domains and text types outside of the training data and to include other types of entities in our analysis, such as products and countries of origin.

How many natural languages are there?

While many believe that the number of languages in the world is approximately 6500, there are 7106 living languages.

Both languages contain important similarities, such as the differentiation they make between syntax and semantics and the existence of a basic composition. Essentially, the two types were created to communicate ideas, expressions, and instructions. Finally, projects such as FirstVoices intend to revitalise indigenous languages in British Columbia, Canada, by preserving language data and allowing its community to access it.

The University

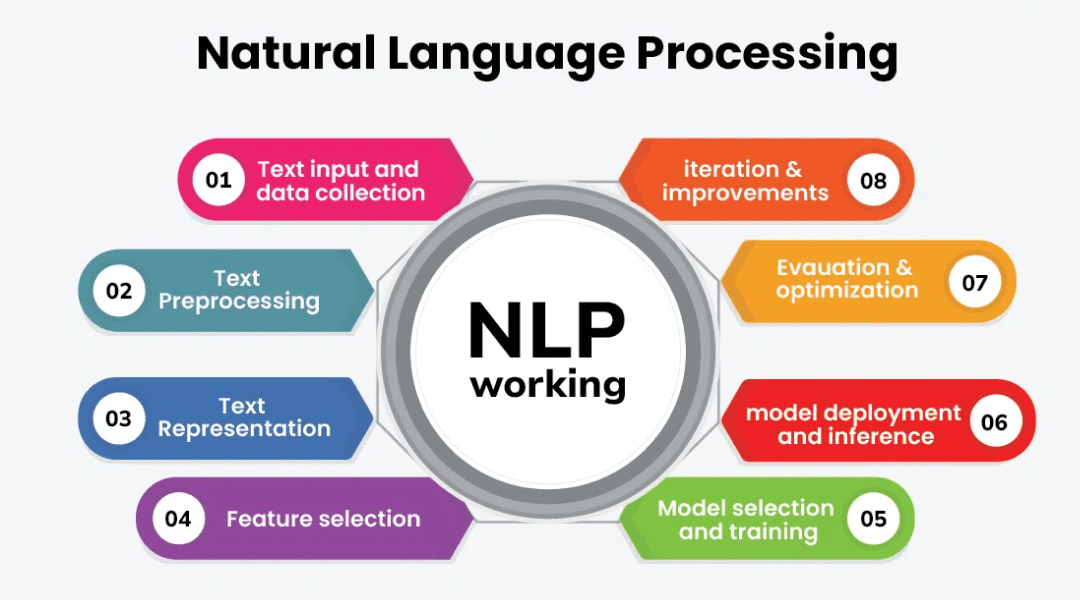

Named entity recognition deals with open-class words such as person, location, date, time, or organisation names. A more general version of the NLP pipeline starts with speech processing, followed by morphological analysis, syntactic analysis, semantic analysis, and pragmatics, finally resulting in a meaning. In syntactic analysis, we use rules of formal grammar to validate a group of words. Syntactic analysis deals with the syntax of sentences, whereas semantic analysis deals with the meaning conveyed by those sentences. NLP deals with human-computer interaction and helps computers understand natural language better. The main goal of Natural Language Processing is to help computers understand language as well as we do.

- Parsing is all about splitting a sentence into its components to find out its meaning.

- In addition to taking into account factors like working conditions and water currents, it would offer pertinent results, such as those from a hyperbaric chamber.

- Automatic speech recognition is one of the most common NLP tasks and involves recognizing speech before converting it into text.

- While the first one is conceptually very hard, the other is laborious and time intensive.

- That number will only increase as organizations begin to realize NLP’s potential to enhance their operations.

- For example, we are doing sentiment classification, and we get a sentence like, “I like this movie very much!”

It could be something simple like frequency of use or sentiment attached, or something more complex. The Natural Language Toolkit (NLTK) is a suite of libraries and programs that can be used for symbolic and statistical natural language processing in English, written in Python. It can help with all kinds of NLP tasks like tokenising (also known as word segmentation), part-of-speech tagging, creating text classification datasets, and much more. Today’s natural language processing systems can analyze unlimited amounts of text-based data without fatigue and in a consistent, unbiased manner. They can understand concepts within complex contexts, and decipher ambiguities of language to extract key facts and relationships, or provide summaries.
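As a rough illustration of tokenising and counting frequency of use, here is a minimal sketch that relies only on Python's standard library rather than on NLTK itself. The regular expression and the function names `tokenise` and `word_frequencies` are assumptions made for this example; a real tokeniser handles many more cases.

```python
import re
from collections import Counter

# Assumed pattern: words (with an optional apostrophe part), numbers, or single punctuation marks.
TOKEN_PATTERN = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)?|\d+|[^\w\s]")


def tokenise(text):
    """Split text into lowercase word, number, and punctuation tokens."""
    return [tok.lower() for tok in TOKEN_PATTERN.findall(text)]


def word_frequencies(text):
    """Count alphabetic tokens only, ignoring numbers and punctuation."""
    return Counter(tok for tok in tokenise(text) if tok[0].isalpha())


sample = "I like this movie. I don't like the ending!"
tokens = tokenise(sample)
freqs = word_frequencies(sample)
print(tokens)
print(freqs.most_common(2))
```

A library tokeniser would normally replace the hand-written pattern; the point here is only that text becomes a list of countable units.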

Step 8: Create Or Select Your Desired Prompt

Meanwhile, the number of tools for businesses, organizations, and individuals has exploded. Offerings such as the NLTK (Natural Language Toolkit) enable anyone with a personal computer and minimal coding knowledge to conduct their own NLP and develop their own chatbots. Natural language processing can be structured in many different ways using different machine learning methods according to what is being analysed.


A more flexible control of parsing can be achieved by adding an explicit agenda to the parser. The agenda consists of new edges that have been generated but have yet to be incorporated into the chart. The parsing process will still be complete as long as all the consequences of adding a new edge to the chart are computed and the resulting edges go onto the agenda. This way, the order in which new edges are added to the agenda does not matter.
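The agenda idea can be sketched with a small bottom-up chart parser. This is an illustrative toy, not a reference implementation: the grammar, the lexicon, the edge layout `(start, end, lhs, rhs, dot)`, and the function names are all assumptions chosen for the example. The `lifo` flag switches the agenda between queue and stack behaviour to show that the final chart does not depend on processing order.

```python
from collections import deque

# Hypothetical toy grammar and lexicon (no empty productions).
GRAMMAR = [
    ("S", ("NP", "VP")),
    ("NP", ("Det", "N")),
    ("NP", ("Det", "Adj", "N")),
    ("VP", ("V", "NP")),
]
LEXICON = {"the": "Det", "cat": "N", "piano": "N", "grand": "Adj", "plays": "V"}


def chart_parse(words, lifo=False):
    """Return the chart (a set of edges) once the agenda is empty.

    An edge is (start, end, lhs, rhs, dot); it is passive when dot == len(rhs).
    Words missing from LEXICON raise KeyError in this sketch.
    """
    chart = set()
    agenda = deque()
    # Seed the agenda with one passive lexical edge per word.
    for i, word in enumerate(words):
        agenda.append((i, i + 1, LEXICON[word], (word,), 1))
    while agenda:
        edge = agenda.pop() if lifo else agenda.popleft()
        if edge in chart:
            continue
        chart.add(edge)
        start, end, lhs, rhs, dot = edge
        if dot == len(rhs):  # passive edge
            # Bottom-up prediction: start every rule whose first symbol is lhs.
            for rule_lhs, rule_rhs in GRAMMAR:
                if rule_rhs[0] == lhs:
                    agenda.append((start, start, rule_lhs, rule_rhs, 0))
            # Fundamental rule: extend active edges that end where this edge starts.
            for s, e, l, r, d in list(chart):
                if e == start and d < len(r) and r[d] == lhs:
                    agenda.append((s, end, l, r, d + 1))
        else:  # active edge
            needed = rhs[dot]
            # Fundamental rule: consume passive edges that start where this edge ends.
            for s, e, l, r, d in list(chart):
                if s == end and d == len(r) and l == needed:
                    agenda.append((start, e, lhs, rhs, dot + 1))
    return chart


def recognises(words):
    """True if a passive S edge spans the whole input."""
    n = len(words)
    return any(
        s == 0 and e == n and lhs == "S" and dot == len(rhs)
        for s, e, lhs, rhs, dot in chart_parse(words)
    )


words = "the cat plays the grand piano".split()
print(recognises(words))
print(chart_parse(words) == chart_parse(words, lifo=True))
```

Because every edge is combined with everything already in the chart at the moment it is added, each compatible pair of edges meets exactly when the later of the two arrives, whichever order the agenda yields.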

Taking each word back to its original form can help NLP algorithms recognize that although the words may be spelled differently, they have the same essential meaning. It also means that only the root words need to be stored in a database, rather than every possible conjugation of every word. We won’t be looking at algorithm development today, as this is less related to linguistics.
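To make the idea of reducing words to a root form concrete, here is a deliberately crude sketch. The irregular-form table, the suffix rules, and the name `to_root` are assumptions invented for this example; production stemmers and lemmatisers use far richer rules and dictionaries, and this one will mangle many words (for instance, it turns "glass" into "glas").

```python
# Hypothetical lookup for irregular forms that suffix rules cannot handle.
IRREGULAR = {"ran": "run", "went": "go", "better": "good"}

# (suffix, replacement) pairs, tried in order; assumed for illustration only.
SUFFIX_RULES = [("ies", "y"), ("ing", ""), ("ed", ""), ("s", "")]


def to_root(word):
    """Map a word to an approximate root form."""
    word = word.lower()
    if word in IRREGULAR:
        return IRREGULAR[word]
    for suffix, replacement in SUFFIX_RULES:
        # Keep at least three characters of stem so short words are left alone.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: len(word) - len(suffix)] + replacement
    return word


forms = ["walk", "walks", "walking", "walked"]
roots = {to_root(w) for w in forms}
print(roots)
```

All four surface forms collapse to a single stored entry, which is the storage saving the paragraph above describes.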

A lexical ambiguity occurs when it is unclear which meaning of a word is intended. Word disambiguation is the process of trying to remove lexical ambiguities. By looking at the wider context, it might be possible to remove that ambiguity. A constituent is a unit of language that serves a function in a sentence; it can be an individual word, a phrase, or a clause. For example, the sentence “The cat plays the grand piano.” comprises two main constituents, the noun phrase (the cat) and the verb phrase (plays the grand piano). The verb phrase can then be further divided into two more constituents, the verb (plays) and the noun phrase (the grand piano).
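The constituent breakdown of the example sentence can be written down as a nested structure. In this sketch the tree is encoded as nested tuples, and the word-level labels (`Det`, `Adj`, `N`, `V`) are assumptions added for illustration; the text above only names the noun phrase, the verb phrase, and the verb.

```python
# (label, child, child, ...) for phrases; (label, word) for single words.
TREE = (
    "S",
    ("NP", ("Det", "the"), ("N", "cat")),
    ("VP",
     ("V", "plays"),
     ("NP", ("Det", "the"), ("Adj", "grand"), ("N", "piano"))),
)


def is_word_node(node):
    """A word node has exactly one child, and that child is a string."""
    return len(node) == 2 and isinstance(node[1], str)


def leaves(node):
    """Return the words covered by a node, left to right."""
    if is_word_node(node):
        return [node[1]]
    words = []
    for child in node[1:]:
        words.extend(leaves(child))
    return words


def constituents(node):
    """Yield (label, phrase) for every phrase-level node, top-down."""
    if is_word_node(node):
        return
    yield node[0], " ".join(leaves(node))
    for child in node[1:]:
        yield from constituents(child)


for label, phrase in constituents(TREE):
    print(label, "->", phrase)
```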

The pros and cons of using GitHub Copilot for software development [survey results]

This is why we’ll discuss the basics of NLP and build on them to develop models of increasing complexity wherever possible, rather than directly jumping to the cutting edge. Context-free grammar (CFG) is a type of formal grammar that is used to model natural languages. CFG was invented by Professor Noam Chomsky, a renowned linguist and scientist. CFGs can be used to capture more complex and hierarchical information that a regex might not capture. To model more complex rules, grammar languages like JAPE (Java Annotation Patterns Engine) can be used [13]. JAPE has features from both regexes and CFGs and can be used for rule-based NLP systems like GATE (General Architecture for Text Engineering) [14].
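A tiny context-free grammar can be written as a mapping from each non-terminal to its possible expansions. The sketch below uses an assumed toy grammar and a hypothetical `expand` function that lists every sentence the grammar derives; it only terminates because this particular grammar has no recursive rules.

```python
from itertools import product

# Hypothetical toy grammar: non-terminal -> list of productions.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"]],
    "VP": [["V", "NP"]],
    "Det": [["the"]],
    "N": [["cat"], ["piano"]],
    "V": [["plays"]],
}


def expand(symbol):
    """Return every word sequence derivable from symbol.

    Symbols that are not keys of GRAMMAR are treated as terminal words.
    """
    if symbol not in GRAMMAR:
        return [[symbol]]
    results = []
    for production in GRAMMAR[symbol]:
        parts = [expand(s) for s in production]
        # Combine one derivation per right-hand-side symbol, in order.
        for combo in product(*parts):
            results.append([word for seq in combo for word in seq])
    return results


sentences = [" ".join(words) for words in expand("S")]
for sentence in sentences:
    print(sentence)
```

The output includes well-formed but odd sentences such as "the piano plays the cat", which shows that a CFG constrains syntax, not meaning.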

- An effective user interface broadens access to natural language processing tools, rather than requiring specialist skills to use them (e.g. programming expertise, command line access, scripting).

- Some datasets you may want to look at in finance – such as annual reports or press releases – are carefully written and reviewed, and are largely grammatically correct.

- Structural ambiguity, such as prepositional phrase (PP) attachment ambiguity, where attachment preference depends on semantics (e.g., “I ate pizza with ham” vs. “I ate pizza with my hands”).

- This not only puts the firm in the driving seat but also reduces concerns regarding data ownership, with the firm having full authority over their data.

- Pragmatic analysis refers to understanding the meaning of sentences with an emphasis on context and the speaker’s intention.

If a system does not perform better than the most frequent sense (MFS) baseline, then there is no practical reason to use that system. The MFS heuristic is hard to beat because senses follow a log distribution: a target word appears very frequently with its MFS, and very rarely with other senses. The distributional hypothesis can be modelled by creating feature vectors, and then comparing these feature vectors to determine if words are similar in meaning, or which meaning a word has.
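One simple way to build and compare such feature vectors is to count the words that appear near a target word and then measure the cosine similarity between the counts. The corpus, the window size, and the function names below are assumptions made for this sketch; real systems use much larger corpora and usually reweight the raw counts.

```python
import math
from collections import Counter


def context_vector(target, sentences, window=2):
    """Count words appearing within `window` positions of each occurrence of target."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, tok in enumerate(tokens):
            if tok == target:
                lo, hi = max(0, i - window), i + window + 1
                counts.update(
                    t for j, t in enumerate(tokens[lo:hi], start=lo) if j != i
                )
    return counts


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical four-sentence corpus.
CORPUS = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the dog",
    "the bank approves the loan",
]

cat = context_vector("cat", CORPUS)
dog = context_vector("dog", CORPUS)
bank = context_vector("bank", CORPUS)
print(cosine(cat, dog) > cosine(cat, bank))
```

Even in this tiny corpus the function word "the" dominates every vector, which is one reason practical systems filter or downweight very frequent words.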

Coupled with sentiment analysis, keyword extraction can help you understand which words consumers use most frequently in negative reviews, making those reviews easier to detect. Text analytics is a type of natural language processing that turns text into data for analysis. Learn how organisations in banking, health care and life sciences, manufacturing and government are using text analytics to drive better customer experiences, reduce fraud and improve society. The COPD Foundation uses text analytics and sentiment analysis, NLP techniques, to turn unstructured data into valuable insights.

Another way is to consider N-grams that consist only of words from certain parts of speech (e.g., nouns, adjectives, etc.), or to consider N-grams to which some part-of-speech filter applies. The amount of semantic ambiguity explodes, and syntactic processing forces semantic choices, leading to much backtracking. It is also difficult to engineer delayed decision making in a processing pipeline. Tabulated parsing avoids recomputation of parses by storing them in a table, known as a chart, or well-formed substring table. In sentences where both modification and complementation are possible, world or pragmatic knowledge will dictate the preferred interpretation. When there is no strong pragmatic preference for either reading, complementation is preferred.
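The two part-of-speech filtering options for N-grams mentioned at the start of the paragraph above can be sketched as follows. The tags are assigned by hand here, and the tag names and function names are assumptions for this example; in practice the tags would come from a part-of-speech tagger.

```python
def ngrams(items, n):
    """Return all contiguous n-grams of a sequence as tuples."""
    return [tuple(items[i:i + n]) for i in range(len(items) - n + 1)]


def pos_filtered_ngrams(tagged, n, allowed):
    """Option 1: keep only words with an allowed tag, then build n-grams from them."""
    kept = [word for word, tag in tagged if tag in allowed]
    return ngrams(kept, n)


def ngrams_matching_pattern(tagged, pattern):
    """Option 2: keep contiguous n-grams whose tag sequence equals the pattern."""
    pattern = tuple(pattern)
    return [
        tuple(word for word, _ in gram)
        for gram in ngrams(tagged, len(pattern))
        if tuple(tag for _, tag in gram) == pattern
    ]


# Hand-tagged example sentence (tags are illustrative).
TAGGED = [
    ("the", "DET"), ("cat", "NOUN"), ("plays", "VERB"),
    ("the", "DET"), ("grand", "ADJ"), ("piano", "NOUN"),
]

print(pos_filtered_ngrams(TAGGED, 2, {"NOUN", "ADJ"}))
print(ngrams_matching_pattern(TAGGED, ("ADJ", "NOUN")))
```

The first option can pair words that were not adjacent in the original sentence, while the second keeps only genuinely contiguous phrases.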

When it comes to figurative language, such as idioms, the ambiguity only increases. Let’s first introduce what these blocks of language are to give context for the challenges involved in NLP. Let’s start by taking a look at some popular applications you use in everyday life that have some form of NLP as a major component. NLP offers many benefits for businesses, especially when it comes to improving efficiency and productivity.

This NLP Python book is for anyone looking to learn NLP’s theoretical and practical aspects alike. It starts with the basics and gradually covers advanced concepts to make it easy to follow for readers with varying levels of NLP proficiency. The languages mentioned above are the most suitable for natural language processing in general. Here, we give a detailed explanation of one of the best of them: the Python programming language.

Morphological and lexical analysis refers to analyzing a text at the level of individual words. Lexical analysis, in particular, involves examining what words mean. Words are broken down into lexemes and their meaning is based on lexicons, the dictionary of a language. For example, “walk” is a lexeme and can be branched into “walks”, “walking”, and “walked”.

Despite this sombre outlook, there is an upside to the massive encroachment of technological progress in our lives. Artificial Intelligence has been used to preserve endangered languages in a variety of projects that attempt to use different tools to keep these linguistic communities alive. Dan Schofield from NHS Digital was tasked with identifying meaningful groupings from the free-text input of a nationwide dataset of appointments in GP practices.

What is organic language?

The metaphor “organic” language learning refers to learning that is natural, without being forced or contrived, and without artificial characteristics.